Eigenvalues and Eigenvectors of 2x2 Matrices

In linear algebra, eigenvalues and eigenvectors are fundamental concepts with applications in physics, engineering, computer science, and data analysis. This article focuses on 2x2 matrices. It shows how to compute their eigenvalues and eigenvectors by hand and surveys where these quantities are used.

Understanding Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors describe how a linear transformation acts along special directions. A 2x2 matrix represents a linear transformation of the plane. When its eigenvalues are real, its eigenvectors are the directions that the transformation maps onto themselves, and the eigenvalues give the factor by which each of those directions is scaled.

An eigenvector of a matrix $\mathbf{A}$ is a nonzero vector $\mathbf{v}$ that the matrix maps to a scalar multiple of itself. That scalar, denoted $\lambda$, is the corresponding eigenvalue. For a real eigenvalue, this means the transformation only stretches, shrinks, or (when $\lambda < 0$) reverses the eigenvector; it never rotates it off the line through the origin that it spans.

The equation that defines this relationship is given by:

\[ \mathbf{A} \mathbf{v} = \lambda \mathbf{v} \]

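where $\mathbf{A}$ is the 2x2 matrix, $\mathbf{v}$ is the eigenvector, and $\lambda$ is the corresponding eigenvalue. The eigenvector must be nonzero, since $\mathbf{v} = \mathbf{0}$ satisfies the equation for every $\lambda$.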
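The defining relation can be checked directly. The following is a minimal Python sketch, assuming matrices are stored as nested tuples `((a, b), (c, d))`; the helper names `mat_vec` and `is_eigenpair`, the tolerance, and the sample matrix are illustrative choices, not part of the text.

```python
# Minimal sketch: test the defining relation A v = lam * v for a 2x2 matrix.
# Matrices are ((a, b), (c, d)) tuples; helper names are hypothetical.

def mat_vec(A, v):
    """Multiply the 2x2 matrix A by the 2-vector v."""
    (a, b), (c, d) = A
    x, y = v
    return (a * x + b * y, c * x + d * y)


def is_eigenpair(A, v, lam, tol=1e-12):
    """Return True if v is nonzero and A v equals lam * v within tol."""
    if abs(v[0]) <= tol and abs(v[1]) <= tol:
        return False  # the zero vector is never an eigenvector
    Av = mat_vec(A, v)
    return all(abs(Av[i] - lam * v[i]) <= tol for i in range(2))


A = ((2.0, 1.0), (1.0, 2.0))
print(is_eigenpair(A, (1.0, 1.0), 3.0))   # True:  A(1, 1) = (3, 3)
print(is_eigenpair(A, (1.0, -1.0), 1.0))  # True:  A(1, -1) = (1, -1)
print(is_eigenpair(A, (1.0, 0.0), 2.0))   # False: A(1, 0) = (2, 1)
```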

Determining Eigenvalues and Eigenvectors for 2x2 Matrices

To find the eigenvalues and eigenvectors of a 2x2 matrix, we follow a systematic process. Let’s consider the matrix:

\[ \mathbf{A} = \begin{bmatrix} a & b \\ c & d \end{bmatrix} \]

Step 1: Finding Eigenvalues

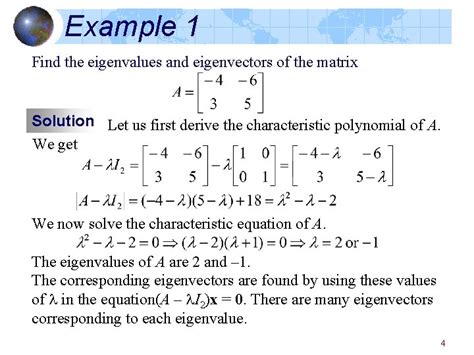

The first step is to determine the eigenvalues of the matrix. This is done by solving the characteristic equation:

\[ \det(\mathbf{A} - \lambda \mathbf{I}) = 0 \]

where $\mathbf{I}$ is the 2x2 identity matrix.

Expanding the determinant, we get:

\[ \begin{aligned} \det(\mathbf{A} - \lambda \mathbf{I}) &= \begin{vmatrix} a - \lambda & b \\ c & d - \lambda \end{vmatrix} \\ &= (a - \lambda)(d - \lambda) - bc \\ &= \lambda^2 - (a + d)\lambda + ad - bc \end{aligned} \]
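The expansion can be sanity-checked numerically by evaluating $\det(\mathbf{A} - \lambda \mathbf{I})$ directly and comparing it with the expanded quadratic at a few values of $\lambda$. This is a minimal sketch; the function names and the sample matrix are hypothetical.

```python
# Minimal sketch: compare det(A - lam*I) computed directly with the expanded
# polynomial lam^2 - (a + d) lam + (ad - bc). Helper names are hypothetical.

def char_poly_direct(A, lam):
    """det(A - lam*I) from the 2x2 determinant formula."""
    (a, b), (c, d) = A
    return (a - lam) * (d - lam) - b * c


def char_poly_coeffs(A):
    """Coefficients (1, -(a + d), ad - bc) of the expanded characteristic polynomial."""
    (a, b), (c, d) = A
    return (1.0, -(a + d), a * d - b * c)


A = ((4.0, 1.0), (2.0, 3.0))
c2, c1, c0 = char_poly_coeffs(A)
for lam in (-1.0, 0.0, 2.5, 5.0):
    expanded = c2 * lam ** 2 + c1 * lam + c0
    print(lam, char_poly_direct(A, lam), expanded)  # the last two columns agree
```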

Setting this equal to zero, we can solve for the eigenvalues $\lambda_1$ and $\lambda_2$ using the quadratic formula:

\[ \lambda_{1,2} = \frac{(a + d) \pm \sqrt{(a + d)^2 - 4(ad - bc)}}{2} \]
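As a worked instance of the formula, take the matrix below (an illustrative choice, not one from the text). Its trace is $a + d = 7$ and its determinant is $ad - bc = 4 \cdot 3 - 1 \cdot 2 = 10$:

\[ \mathbf{A} = \begin{bmatrix} 4 & 1 \\ 2 & 3 \end{bmatrix}, \qquad \lambda_{1,2} = \frac{7 \pm \sqrt{7^2 - 4 \cdot 10}}{2} = \frac{7 \pm 3}{2}, \qquad \lambda_1 = 5, \quad \lambda_2 = 2 \]

Substituting back, $\lambda^2 - 7\lambda + 10 = (\lambda - 5)(\lambda - 2)$, so both values satisfy the characteristic equation.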

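Here $a + d$ is the trace of $\mathbf{A}$ and $ad - bc$ is its determinant. For a real matrix, the sign of the discriminant $(a + d)^2 - 4(ad - bc)$ decides what the formula produces: a positive discriminant gives two distinct real eigenvalues, zero gives a single repeated eigenvalue, and a negative discriminant gives a pair of complex-conjugate eigenvalues.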
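The quadratic formula translates directly into code. This minimal sketch (the name `eigenvalues_2x2` is hypothetical) uses `math.sqrt` when the discriminant is non-negative and `cmath.sqrt` otherwise, so all three cases are handled.

```python
import cmath
import math


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] from the quadratic formula.

    Returns two floats when the discriminant is non-negative and a
    complex-conjugate pair otherwise.
    """
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det  # (a + d)^2 - 4(ad - bc)
    root = math.sqrt(disc) if disc >= 0 else cmath.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


print(eigenvalues_2x2(4, 1, 2, 3))   # (5.0, 2.0): distinct real eigenvalues
print(eigenvalues_2x2(2, 1, 0, 2))   # (2.0, 2.0): repeated eigenvalue
print(eigenvalues_2x2(0, -1, 1, 0))  # complex-conjugate pair i and -i
```

This is the textbook formula, not a numerically hardened routine: when $ad - bc$ is tiny compared with $(a + d)^2$, the subtraction `trace - root` cancels nearly equal numbers and can lose accuracy.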

Step 2: Finding Eigenvectors

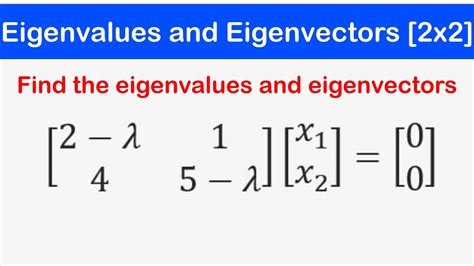

Once we have the eigenvalues, we can proceed to find the corresponding eigenvectors. For each eigenvalue $\lambda_i$, we solve the equation:

\[ (\mathbf{A} - \lambda_i \mathbf{I}) \mathbf{v}_i = \mathbf{0} \]

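where $\mathbf{v}_i$ is the eigenvector associated with the eigenvalue $\lambda_i$. This is a homogeneous system of linear equations, and we need a non-trivial solution ($\mathbf{v}_i \neq \mathbf{0}$). Such a solution is guaranteed to exist: $\lambda_i$ was chosen so that $\det(\mathbf{A} - \lambda_i \mathbf{I}) = 0$, which makes $\mathbf{A} - \lambda_i \mathbf{I}$ singular. We find it by row-reducing the augmented matrix and solving for the variables. Any nonzero scalar multiple of a solution is also a solution, so an eigenvector is never unique.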
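For a 2x2 matrix the row reduction can be short-circuited. Because the two rows of $\mathbf{A} - \lambda_i \mathbf{I}$ are linearly dependent, any nonzero row determines the solution: a vector orthogonal to the first row $(a - \lambda, b)$ is $(b, \lambda - a)$. The sketch below uses that shortcut, assuming `lam` is an exact (or very accurate) eigenvalue; the name `eigenvector_2x2` is hypothetical.

```python
def eigenvector_2x2(a, b, c, d, lam):
    """Return a nonzero v with (A - lam*I) v = 0 for A = [[a, b], [c, d]].

    Assumes lam is an eigenvalue of A, so the rows of A - lam*I are linearly
    dependent and one nonzero row determines v up to a scalar multiple.
    """
    if b != 0:
        # First row (a - lam, b): the vector (b, lam - a) is orthogonal to it.
        return (b, lam - a)
    if c != 0:
        # Second row (c, d - lam): the vector (lam - d, c) is orthogonal to it.
        return (lam - d, c)
    # b == c == 0: A is diagonal, so a standard basis vector works.
    return (1, 0) if abs(lam - a) <= abs(lam - d) else (0, 1)


# Illustrative matrix [[4, 1], [2, 3]], whose eigenvalues are 5 and 2.
print(eigenvector_2x2(4, 1, 2, 3, 5))  # (1, 1)
print(eigenvector_2x2(4, 1, 2, 3, 2))  # (1, -2)
```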

For example, if we have $\lambda_1 = 3$, we would set up the equation:

\[ \begin{bmatrix} a - 3 & b \\ c & d - 3 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \]

and solve for $x$ and $y$ to find the eigenvector $\mathbf{v}_1 = \begin{bmatrix} x \\ y \end{bmatrix}$.
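To make this concrete, assume for illustration that $a = 2$, $b = 1$, $c = 1$, $d = 2$. This matrix has trace 4 and determinant 3, so its eigenvalues are 3 and 1, and $\lambda_1 = 3$ is a genuine eigenvalue. The system becomes:

\[ \begin{bmatrix} -1 & 1 \\ 1 & -1 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \quad\Longrightarrow\quad -x + y = 0 \quad\Longrightarrow\quad \mathbf{v}_1 = t \begin{bmatrix} 1 \\ 1 \end{bmatrix}, \; t \neq 0 \]

Both rows give the same condition $x = y$, as expected for a singular matrix, and choosing $t = 1$ gives $\mathbf{v}_1 = \begin{bmatrix} 1 \\ 1 \end{bmatrix}$.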

Applications and Interpretations

Eigenvalues and eigenvectors have numerous applications and interpretations in various fields. Here are a few examples:

- Physics: In quantum mechanics, the eigenvalues of an operator representing an observable are the possible outcomes of measuring it, and the corresponding eigenvectors are the states in which the measurement yields that value with certainty.

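- Engineering: In structural analysis, the eigenvalues of a structure's stiffness and mass matrices determine its natural vibration frequencies, and the eigenvectors give the corresponding mode shapes. Eigenvalue problems also arise when assessing buckling and stability.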

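- Computer Graphics: They help analyze the linear transformations used to position and deform 3D objects, for example by identifying the directions a transformation stretches or leaves fixed (the axis of a 3D rotation is an eigenvector with eigenvalue 1).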

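- Data Analysis: Principal component analysis (PCA) uses the eigenvectors of a dataset's covariance matrix as the directions of greatest variance, with the eigenvalues giving the variance along each direction. Keeping only the leading eigenvectors reduces the dimensionality of the data while retaining most of its variation.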

- Image Processing: In image compression and denoising, eigen-decompositions (and the closely related singular value decomposition) separate the dominant components of an image from less significant detail.
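The PCA application can be sketched in two dimensions, where the covariance matrix is 2x2 and the closed-form eigenvalue formula applies. This is a minimal illustration, not a general PCA implementation; the function names and sample points are hypothetical, and it relies on the covariance matrix being symmetric (real eigenvalues, orthogonal eigenvectors).

```python
import math


def covariance_2d(points):
    """Sample covariance entries (sxx, sxy, syy) of (x, y) points, n - 1 denominator."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    return sxx, sxy, syy


def principal_axes(points):
    """Return [(variance, unit_direction), ...] for the 2x2 covariance matrix,
    largest variance first."""
    sxx, sxy, syy = covariance_2d(points)
    trace, det = sxx + syy, sxx * syy - sxy * sxy
    # A covariance matrix is symmetric, so its eigenvalues are real; clamp
    # tiny negative discriminants caused by rounding.
    root = math.sqrt(max(trace * trace - 4 * det, 0.0))
    lam1, lam2 = (trace + root) / 2, (trace - root) / 2
    if sxy != 0:
        vx, vy = sxy, lam1 - sxx  # orthogonal to the first row (sxx - lam1, sxy)
    else:
        vx, vy = (1.0, 0.0) if sxx >= syy else (0.0, 1.0)  # already diagonal
    norm = math.hypot(vx, vy)
    v1 = (vx / norm, vy / norm)
    v2 = (-v1[1], v1[0])  # eigenvectors of a symmetric matrix are orthogonal
    return [(lam1, v1), (lam2, v2)]


points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9)]
for variance, direction in principal_axes(points):
    print(round(variance, 4), tuple(round(t, 4) for t in direction))
```

Projecting the data onto the first direction alone would be the one-dimensional reduction described in the bullet above.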

Performance Analysis and Comparisons

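For a 2x2 matrix, the eigenvalues come directly from the closed-form quadratic formula, so the cost is a few arithmetic operations and one square root. This approach does not scale: there is no general formula in radicals for the roots of polynomials of degree five or higher, and finding eigenvalues as roots of the characteristic polynomial is numerically fragile even for smaller matrices. Larger problems are therefore solved with iterative algorithms; for a dense $n \times n$ matrix, computing all eigenvalues typically costs on the order of $n^3$ operations.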

Among iterative methods, the power method repeatedly multiplies a vector by the matrix and converges to the eigenvalue of largest magnitude (when one eigenvalue is strictly largest in magnitude) together with its eigenvector, which makes it cheap when only that one eigenpair is needed. The QR algorithm computes all eigenvalues and is the standard approach for dense matrices. The right choice depends on the matrix (its size, sparsity, and symmetry), how many eigenvalues are needed, and the required accuracy.
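The power method can be sketched on a 2x2 matrix in a few lines. This minimal version, whose name, default start vector, iteration cap, and tolerance are all assumptions, renormalizes the iterate each step and uses the Rayleigh quotient $\mathbf{v}^\top \mathbf{A} \mathbf{v}$ of the unit vector as its eigenvalue estimate. The QR algorithm is not shown.

```python
import math


def power_method(A, v0=(1.0, 0.0), max_iter=200, tol=1e-12):
    """Estimate the dominant eigenpair of the 2x2 matrix A = ((a, b), (c, d)).

    Converges when one eigenvalue is strictly larger in magnitude than the
    other and v0 has a nonzero component along its eigenvector.
    """
    (a, b), (c, d) = A
    norm = math.hypot(v0[0], v0[1])
    x, y = v0[0] / norm, v0[1] / norm  # start from a unit vector
    lam = 0.0
    for _ in range(max_iter):
        wx, wy = a * x + b * y, c * x + d * y  # w = A v
        norm = math.hypot(wx, wy)
        if norm == 0.0:
            raise ValueError("A v is zero; choose a different starting vector")
        x, y = wx / norm, wy / norm  # renormalize to avoid overflow/underflow
        # Rayleigh quotient v^T A v of the unit vector v is the eigenvalue estimate.
        new_lam = x * (a * x + b * y) + y * (c * x + d * y)
        if abs(new_lam - lam) <= tol * max(1.0, abs(new_lam)):
            return new_lam, (x, y)
        lam = new_lam
    return lam, (x, y)  # best estimate if max_iter was reached


lam, v = power_method(((4.0, 1.0), (2.0, 3.0)))
print(lam, v)  # lam close to 5, v close to (1, 1) / sqrt(2)
```

The error shrinks roughly by the ratio $|\lambda_2 / \lambda_1|$ per step (0.4 for this illustrative matrix), which is why the method is slow when the two eigenvalues have similar magnitudes.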

Future Implications

The study of eigenvalues and eigenvectors continues to be a vibrant area of research, with ongoing developments and applications. As technology advances, new algorithms and techniques are being explored to enhance the efficiency and accuracy of eigenvalue computations. Additionally, the field of machine learning and artificial intelligence is leveraging eigenvalue analysis to develop innovative solutions for various problems, from image recognition to natural language processing.

Furthermore, the concept of eigenvalues and eigenvectors has found its way into more abstract areas of mathematics, such as functional analysis and operator theory, where it plays a fundamental role in understanding the behavior of linear operators and function spaces.

Conclusion

Eigenvalues and eigenvectors are powerful tools in linear algebra, offering a deep understanding of the properties and behavior of matrices. By exploring the relationship between these concepts and their applications, we gain insights into the intricate mathematical structures that underpin numerous real-world phenomena. As technology advances and new challenges arise, eigenvalue analysis will continue to play a pivotal role in solving complex problems across various domains.

How are eigenvalues and eigenvectors used in real-world applications?

Eigenvalues and eigenvectors have a wide range of applications. In physics, they are used in quantum mechanics and vibration analysis. In engineering, they are central to structural analysis and to understanding the stability of systems. In computer graphics, they help analyze 3D transformations. Data analysis and image processing use eigenvalue decomposition for dimensionality reduction and feature extraction.

What is the significance of eigenvalues in matrix analysis?

Eigenvalues describe how a matrix acts along its eigenvector directions: each real eigenvalue is the factor by which the transformation scales vectors along that direction, while complex eigenvalues indicate a rotational component. They also encode key properties of the matrix: their sum equals the trace and their product equals the determinant, the matrix is invertible exactly when 0 is not an eigenvalue, and the eigenvalues of a system matrix determine whether a linear dynamical system is stable.
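For a 2x2 matrix, the eigenvalues sum to the trace $a + d$ and multiply to the determinant $ad - bc$, which follows from the characteristic polynomial $\lambda^2 - (a + d)\lambda + (ad - bc)$. A matrix is singular exactly when one of its eigenvalues is 0. The sketch below checks these facts numerically; the helper names and sample matrices are illustrative assumptions.

```python
import cmath


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] via the quadratic formula (complex-safe)."""
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace + root) / 2, (trace - root) / 2


def is_invertible(a, b, c, d, tol=1e-12):
    """A matrix is invertible exactly when none of its eigenvalues is zero."""
    return all(abs(lam) > tol for lam in eigenvalues_2x2(a, b, c, d))


for a, b, c, d in [(4, 1, 2, 3), (0, -1, 1, 0), (1, 2, 2, 4)]:
    l1, l2 = eigenvalues_2x2(a, b, c, d)
    print("sum", l1 + l2, "trace", a + d)
    print("product", l1 * l2, "det", a * d - b * c)
    print("invertible", is_invertible(a, b, c, d))
```

The third matrix has proportional rows, so its determinant and one eigenvalue are both 0.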

Can a matrix have complex eigenvalues and eigenvectors?

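Yes. A real matrix has complex eigenvalues when its characteristic equation has complex roots; for a real 2x2 matrix this happens exactly when the discriminant $(a + d)^2 - 4(ad - bc)$ is negative. The eigenvalues then form a complex-conjugate pair, as do their eigenvectors. A rotation of the plane by an angle that is not a multiple of $\pi$ is a standard example, since it leaves no real direction unchanged. Complex eigenvalues and eigenvectors have applications in fields like quantum mechanics and signal processing, where they describe oscillatory or rotating behavior.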
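A rotation matrix makes the complex case concrete. For a counterclockwise rotation by $\theta$, the trace is $2\cos\theta$ and the determinant is 1, so the eigenvalues are $\cos\theta \pm i\sin\theta = e^{\pm i\theta}$, with modulus 1 and angle equal to the rotation angle. This minimal sketch checks that numerically and verifies the complex eigenvector $(1, -i)$; the helper names and the choice $\theta = \pi/3$ are illustrative.

```python
import cmath
import math


def rotation(theta):
    """2x2 matrix that rotates the plane counterclockwise by theta radians."""
    return ((math.cos(theta), -math.sin(theta)),
            (math.sin(theta), math.cos(theta)))


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] via the quadratic formula, allowing complex roots."""
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)  # negative discriminant -> imaginary root
    return (trace + root) / 2, (trace - root) / 2


theta = math.pi / 3
R = rotation(theta)
l1, l2 = eigenvalues_2x2(*R[0], *R[1])
print(l1, l2)                    # approximately cos(theta) + i sin(theta) and its conjugate
print(abs(l1), cmath.phase(l1))  # modulus about 1, angle about theta

# The complex vector (1, -i) is an eigenvector for l1 = e^{i theta}.
v = (1, -1j)
Rv = (R[0][0] * v[0] + R[0][1] * v[1], R[1][0] * v[0] + R[1][1] * v[1])
print(all(abs(Rv[k] - l1 * v[k]) < 1e-12 for k in range(2)))  # True
```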