Transition Matrix Probability

Transition matrices and the probabilities they contain are a core tool for working with Markov chains and other stochastic processes. They provide a compact framework for analyzing random systems that evolve over time. Whether you're studying the weather patterns of a region, the behavior of a stock market, or the movement of particles in a physical system, a transition matrix gives you a mathematical model for predicting the probabilities of future states from the current state alone.

Unraveling Transition Matrices

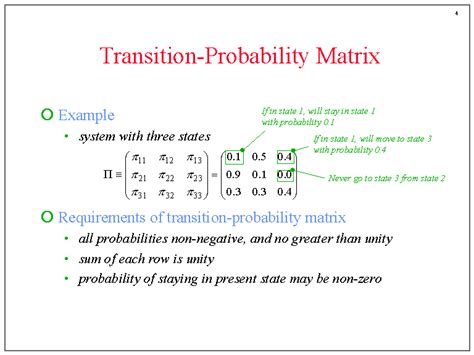

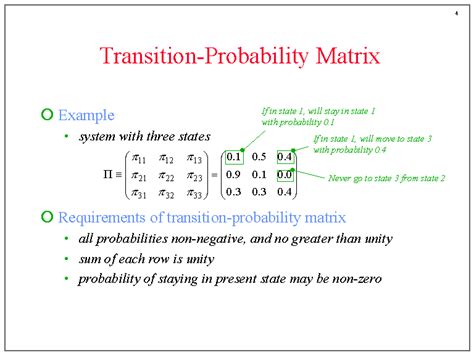

A transition matrix, in the context of Markov chains, is a square matrix where each entry Pij represents the probability of transitioning from state i to state j in one step. These matrices are also called stochastic matrices (more precisely, row-stochastic): every entry is non-negative and every row sums to 1, which guarantees that total probability is preserved over any number of steps.
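
The conditions usually meant by "stochastic" (a square matrix, non-negative entries, each row summing to 1) are easy to check in code. The following is a minimal sketch in plain Python; the function name `is_row_stochastic`, the tolerance, and the two small example matrices are illustrative assumptions, not part of any particular library.

```python
import math

def is_row_stochastic(matrix, tol=1e-9):
    """Return True if matrix is square, non-negative, and each row sums to 1."""
    n = len(matrix)
    if n == 0:
        return False
    for row in matrix:
        if len(row) != n:              # must be square
            return False
        if any(p < 0 for p in row):    # probabilities cannot be negative
            return False
        if not math.isclose(sum(row), 1.0, abs_tol=tol):
            return False               # each row must sum to 1
    return True

# Hypothetical two-state examples, made up for illustration.
valid = [[0.9, 0.1], [0.5, 0.5]]
invalid = [[0.9, 0.2], [0.5, 0.5]]     # first row sums to 1.1

print(is_row_stochastic(valid))
print(is_row_stochastic(invalid))
```

The tolerance allows for floating-point rounding when rows are computed rather than typed in by hand.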

The appeal of transition matrices is that they capture the dynamics of a system in a single object. The probability distribution over future states is obtained by multiplying the current state vector by the transition matrix, once per step. Mathematically, if we have a system with n states and the current distribution over them is a row vector P0 of length n (non-negative entries summing to 1), then the state vector after k steps, Pk, is:

Pk = P0 * P^k

Here, P is the transition matrix and P^k is its k-th power, that is, P multiplied by itself k times. The equation lets us forecast the distribution over states at any future step from the initial distribution and the transition probabilities alone.

An Illustrative Example

Consider a simple weather model with three states: Sunny, Cloudy, and Rainy. The transition matrix for this system might look like this:

| From / To | Sunny | Cloudy | Rainy |
|---|---|---|---|
| Sunny | 0.6 | 0.3 | 0.1 |
| Cloudy | 0.2 | 0.4 | 0.4 |
| Rainy | 0.1 | 0.2 | 0.7 |

In this matrix, rows are today's weather and columns are tomorrow's, so each entry Pij is the probability of moving from state i to state j in one day. For instance, the entry 0.6 in the first row and first column indicates that there's a 60% chance of a sunny day being followed by another sunny day.

Now, let's say we start our observations on a sunny day. Our initial state vector P0 would be [1, 0, 0], representing that we're definitely in the "Sunny" state with no probability in the other states. After one day, our state vector becomes:

P1 = P0 * P = [1, 0, 0] * P = [0.6, 0.3, 0.1]

This means there's now a 60% chance we're still in a sunny state, a 30% chance it's cloudy, and a 10% chance of rain. As we continue to multiply by the transition matrix, we can predict the weather for any number of days in the future.
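
The repeated multiplication described here can be sketched in a few lines of plain Python. The matrix values come from the weather table; the helper names `step` and `forecast`, and the choice of days to print, are illustrative assumptions.

```python
STATES = ["Sunny", "Cloudy", "Rainy"]

# Rows are today's state, columns are tomorrow's (values from the weather table).
P = [
    [0.6, 0.3, 0.1],
    [0.2, 0.4, 0.4],
    [0.1, 0.2, 0.7],
]

def step(dist, matrix):
    """Advance a row-vector distribution by one step: dist * matrix."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]

def forecast(dist, matrix, days):
    """Apply the transition matrix `days` times to the starting distribution."""
    for _ in range(days):
        dist = step(dist, matrix)
    return dist

start = [1.0, 0.0, 0.0]  # certainly Sunny on day 0

for day in (1, 2, 7):
    probs = forecast(start, P, day)
    print(day, dict(zip(STATES, (round(p, 3) for p in probs))))
```

Day 1 reproduces [0.6, 0.3, 0.1], and day 2 works out to approximately [0.43, 0.32, 0.25]: for example, the Sunny entry is 0.6 × 0.6 + 0.3 × 0.2 + 0.1 × 0.1 = 0.43.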

Key Properties and Applications

Transition matrices possess several key properties that make them invaluable in various fields:

- Stationary Distribution: A stationary distribution is a probability distribution over the states that is unchanged by one step of the chain: a row vector π satisfying π = π * P, with entries summing to 1. If the system starts in this distribution, it stays in it. It can be found by solving that linear system together with the normalization condition.

- Absorbing States: Some Markov chains have absorbing states, which are states that, once entered, are never left (Pii = 1 for an absorbing state i). Identifying and analyzing these states can provide valuable insights into the long-term behavior of the system.

- Reversible Markov Chains: A chain is reversible with respect to a stationary distribution π when it satisfies detailed balance: πi * Pij = πj * Pji for every pair of states i and j. In equilibrium, the probability flow from i to j then equals the flow from j to i, which is a weaker requirement than Pij = Pji. Reversible Markov chains have important applications in physics and statistical mechanics.

- PageRank Algorithm: Google's PageRank algorithm models a random surfer following links as a Markov chain whose transition matrix is built from the web's link structure. A page's rank is its probability under that chain's stationary distribution.
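
As a rough illustration of the stationary-distribution and reversibility properties, the sketch below approximates the stationary distribution of the weather matrix by power iteration (repeatedly applying the matrix until the distribution stops changing) and then checks detailed balance. Power iteration is swapped in here for solving the linear system directly; it converges for this matrix because every entry is positive, but it is not guaranteed to converge for every chain (periodic chains, for example). The function names and tolerances are assumptions made for this example.

```python
# Weather matrix from the example (rows: today, columns: tomorrow).
P = [
    [0.6, 0.3, 0.1],
    [0.2, 0.4, 0.4],
    [0.1, 0.2, 0.7],
]

def step(dist, matrix):
    """One step of the chain: row vector times matrix."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]

def stationary_distribution(matrix, tol=1e-12, max_iter=10_000):
    """Approximate pi with pi = pi * matrix by power iteration from uniform."""
    n = len(matrix)
    dist = [1.0 / n] * n
    for _ in range(max_iter):
        nxt = step(dist, matrix)
        if max(abs(a - b) for a, b in zip(nxt, dist)) < tol:
            return nxt
        dist = nxt
    raise RuntimeError("power iteration did not converge")

def satisfies_detailed_balance(matrix, pi, tol=1e-9):
    """Check pi[i] * P[i][j] == pi[j] * P[j][i] for all pairs (reversibility)."""
    n = len(matrix)
    return all(
        abs(pi[i] * matrix[i][j] - pi[j] * matrix[j][i]) <= tol
        for i in range(n)
        for j in range(n)
    )

pi = stationary_distribution(P)
print([round(p, 4) for p in pi])
print(satisfies_detailed_balance(P, pi))
```

Solving π = π * P by hand for this matrix gives π = [10/39, 11/39, 18/39], roughly 26% Sunny, 28% Cloudy, and 46% Rainy in the long run. The detailed-balance check fails for this chain (for instance, π1 * P12 = 3/39 while π2 * P21 = 2.2/39), so the weather example is not reversible.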

Transition Matrices in Action

Transition matrices and their associated probabilities find applications in a wide array of disciplines, including:

- Finance: In stock market analysis, transition matrices can model how prices or market conditions move between discrete states, giving investors probability estimates for future moves rather than firm predictions.

- Engineering: Engineers use transition matrices to model and predict the behavior of physical systems, such as fluid dynamics or electrical circuits.

- Biology: Biologists employ these matrices to study population dynamics, disease progression, and the evolution of species.

- Computer Science: From natural language processing to machine learning, transition matrices play a crucial role in various algorithms and models.

- Social Sciences: Sociologists and psychologists use transition matrices to model and predict human behavior, such as the spread of opinions or the progression of psychological disorders.

Future Implications

As our understanding of transition matrices and Markov chains deepens, new avenues of research and application continue to emerge. One exciting area is the integration of machine learning techniques with Markov models. By combining the power of artificial intelligence with the predictive capabilities of transition matrices, we can develop even more sophisticated models for a wide range of applications.

Furthermore, with the advent of big data and advanced computing technologies, researchers are now able to analyze and model complex systems with unprecedented accuracy. This opens up new possibilities for applying transition matrices to real-world problems, from optimizing supply chains to predicting the spread of diseases.

In conclusion, transition matrices and their associated probabilities provide a robust framework for understanding and predicting the behavior of dynamic systems. Their applications are vast and varied, making them a cornerstone concept in fields as diverse as finance, engineering, biology, and computer science. As we continue to explore and innovate, the future of transition matrix analysis looks bright, promising new insights and advancements.

What is a Markov chain, and how does it relate to transition matrices?

A Markov chain is a mathematical model that describes a sequence of events where the probability of each event depends only on the state attained in the previous event. Transition matrices are a key component of Markov chains, providing the probabilities of transitioning from one state to another.

How are transition matrices calculated in practice?

Transition matrices are often estimated from historical data. For example, given a daily weather record, you can count how many times each state i was followed by each state j, then divide each count by the total number of transitions out of state i. Each row of the estimated matrix then sums to 1.
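
The counting procedure can be sketched as follows. The observation sequence is made up for illustration, and `estimate_transition_matrix` is a hypothetical helper name; the sketch assumes consecutive entries in the list are consecutive days.

```python
from collections import Counter

def estimate_transition_matrix(sequence, states):
    """Estimate P[i][j] as (count of i followed by j) / (count of transitions out of i)."""
    counts = Counter(zip(sequence, sequence[1:]))  # consecutive (from, to) pairs
    matrix = []
    for src in states:
        row_total = sum(counts[(src, dst)] for dst in states)
        if row_total == 0:
            # No transitions out of src were observed, so the row cannot be
            # estimated; it is left as zeros and is not a valid probability row.
            matrix.append([0.0] * len(states))
        else:
            matrix.append([counts[(src, dst)] / row_total for dst in states])
    return matrix

STATES = ["Sunny", "Cloudy", "Rainy"]

# Made-up ten-day record, for illustration only.
observed = [
    "Sunny", "Sunny", "Cloudy", "Rainy", "Rainy",
    "Sunny", "Cloudy", "Cloudy", "Rainy", "Sunny",
]

estimate = estimate_transition_matrix(observed, STATES)
for state, row in zip(STATES, estimate):
    print(state, [round(p, 3) for p in row])
```

With only nine observed transitions, the estimates are coarse (every probability is a multiple of 1/3) and some transitions never appear at all, which illustrates why short records give unreliable matrices.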

What are some challenges in working with transition matrices and Markov chains?

One challenge is ensuring that the transition matrix satisfies the requirements of a stochastic matrix, namely that all entries are non-negative and the sum of each row is 1. Additionally, estimating transition matrices from limited data can be difficult and may lead to inaccurate predictions.